The morning kicked off with a warm welcome from the GDG DevFest organizers Naveen, Hema, and Rishy, who shared some fascinating insights about the community’s journey.

Did you know that what we now know as GDG (Google Developer Groups) actually started as GTUG (Google Technology User Groups) 15 years ago?

They set the tone for the day with a clear call to action: every attendee should leave with at least one AI-related action item to implement.

Keynote - “The Road to Transformer and Beyond.”

As someone who’s been with Google for 10 years and has actually attended the very first DevFest back in 2013, Muthu started with his memeories of the event from the past.

How Did We Get Here? The Technical Breakthroughs

Muthu started putting into context (pun intended) How did we evolve from simple text processing to AI systems that can understand our intent and become true collaborators?

The Early Days: N-grams and Statistical Models

It starts with N-grams, one of the earliest approaches to language modeling. These were statistical models that looked at sequences of words to predict what might come next. While groundbreaking for their time, they were limited in understanding context and meaning.

Functions Describe the World!

Muthu made an elegant point about the nature of mathematics and functions. Just as mathematical functions describe the physical world, we needed functions that could describe language, similarity, and meaning. This led us to the revolutionary concept of using neural networks as function approximators.

Neural Networks: Learning Complex Functions

Neural networks emerged as powerful function approximators with a simple yet effective structure: input layers, hidden layers, and output layers. Muthu emphasized a crucial insight that drives much of modern AI: the world is fundamentally non-linear, and we need models that can handle this complexity.

Deep Neural Networks: Tackling Even Greater Complexity

As our computational capabilities grew, Deep neural networks allowed us to learn increasingly complex functions, pushing the boundaries of what machines could understand and process.

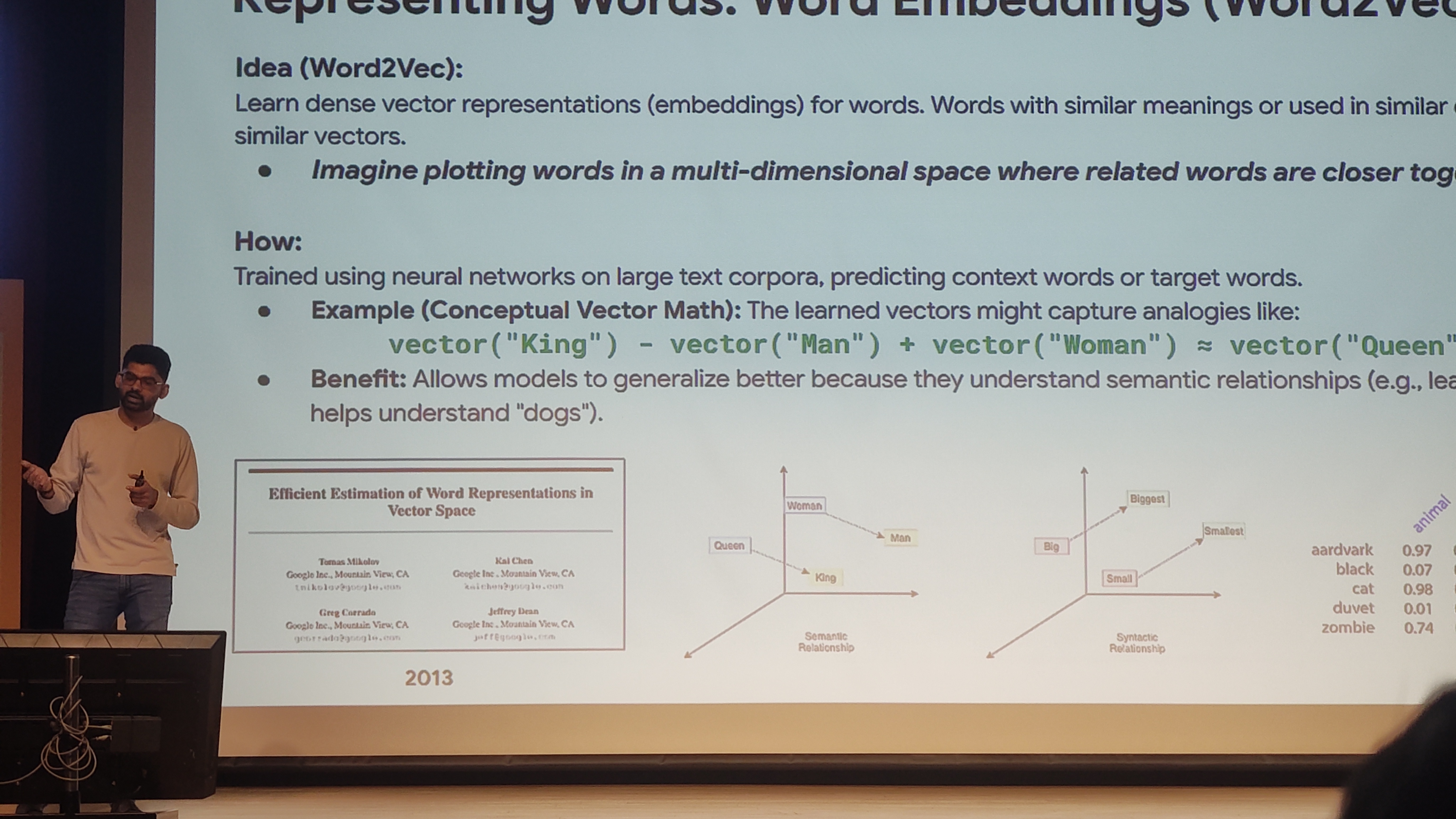

Representing Words: The Word2Vec Revolution

A significant breakthrough came with word embeddings, particularly Word2Vec. This innovation allowed us to represent words as vectors in a high-dimensional space, capturing semantic relationships between them. Suddenly, our models could understand that “king” is to “queen” as “man” is to “woman”—a conceptual leap that transformed natural language processing.

But with meaning established, we faced a new challenge: memory. How could our models remember and contextually use information over longer sequences?

Remembering the Past: Recurrent Neural Networks

Recurrent Neural Networks (RNNs) were designed to address this memory limitation by introducing loops that allowed information to persist. However, they struggled with the vanishing gradient problem, making it difficult to learn long-range dependencies.

Improving RNN Memory: The LSTM Breakthrough

Long Short-Term Memory (LSTM) networks emerged as a solution to RNN’s memory limitations. With their sophisticated gating mechanisms, LSTMs could better remember and forget information, making them much more effective at handling longer sequences.

Seq2Seq with RNNs: Understanding Translation

The sequence-to-sequence (Seq2Seq) architecture revolutionized tasks like translation. Muthu used a compelling analogy: to listen and speak effectively, you need two minds—one for processing input and another for generating output.

Focusing on What Matters: The Attention Mechanism

Despite these advances, there was still a fundamental limitation. “The world is too vast to see, you must focus.” The attention mechanism allowed models to selectively focus on different parts of the input when generating each part of the output, much like how humans pay attention to specific details when processing information.

”Attention Is All You Need”: The Transformer Revolution

This led to the groundbreaking insight that “attention is all you need.” The key realization was that the world doesn’t happen in sequence, we need models that can process information in parallel and understand the relationships between different parts of the input, regardless of their position.

As Muthu emphasized, when you combine all these innovation:

word embeddings, attention mechanisms, and parallel processing, you get the revolution that transformed AI: Transformers.

This architecture didn’t just improve existing models; it fundamentally changed what was possible, enabling the large language models that power today’s AI applications and setting the stage for the next generation of artificial intelligence systems.