This session was a fascinating look at how to bridge the gap between powerful cloud-based AI models and efficient on-device AI applications, focused on practical strategies for leveraging Google’s Gemini API and Gemma models to create intelligent, privacy-focused applications that work seamlessly across devices.

Understanding the AI Model Landscape: LLMs vs. SLMs

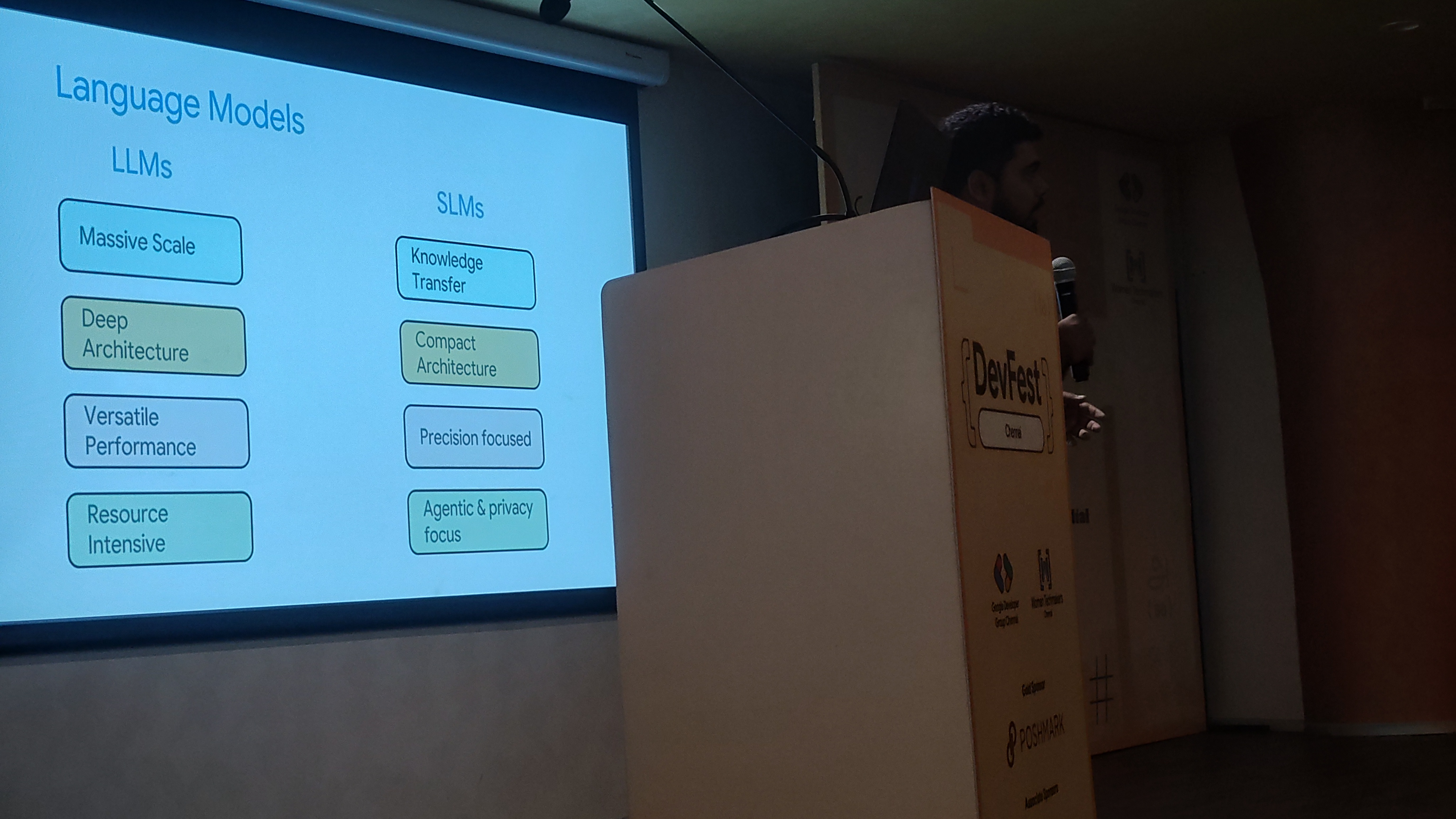

Mayur began by clarifying the fundamental differences between Large Language Models (LLMs) and Small Language Models (SLMs):

Large Language Models (LLMs)

- Massive Scale: Hundreds of billions of parameters

- Deep Architecture: Sophisticated neural network designs

- Versatile Performance: Excel across a wide range of tasks

- Resource Intensive: Require significant computational power and infrastructure

Small Language Models (SLMs)

- Knowledge Transfer: Trained on knowledge distilled from larger models

- Compact Architecture: Optimized for efficiency and speed

- Precision Focused: Specialized for specific tasks or domains

- Agentic & Privacy Focused: Designed to run locally and protect user data

Mayur explained that Gemma models are essentially “trained on Gemini,” representing a form of transfer learning where the capabilities of large models are distilled into smaller, more efficient versions. This approach is gaining traction with models like SmolLM and ShaktiLM, which prioritize efficiency without sacrificing too much capability.

Gemma Models: Technical Deep Dive

Mayur provided an insightful look at the technical architecture that makes Gemma models special:

Key Architectural Features

- Decoder-Only Design: Simplified architecture optimized for text generation

- Advanced Attention Mechanisms: Efficient attention patterns that reduce computational overhead

- Shared Embeddings: Space-efficient approach to representing text

- Vision-Language Capabilities: Multi-modal support for processing both text and images

- Matformer Innovations: Google’s latest architectural improvements for efficiency

The Model Adaptation Imperative: Challenges in Mobile AI

Transitioning from cloud-based models to on-device applications presents several critical challenges outlined as the “Model Adaptation Imperative”:

the challenges are:

1. Domain Mismatch

Pre-trained models often don’t understand the specific terminology, context, and patterns relevant to your particular application domain. A general-purpose model might struggle with medical terminology, legal language, or specialized technical jargon.

2. Static Knowledge

Models trained at a specific point in time have no awareness of events, information, or developments that occurred after their training cutoff. This is particularly problematic for applications requiring current information.

3. Deployment Constraints

Mobile and edge devices have strict limitations on memory, processing power, battery life, and storage. Models must be optimized to work within these constraints while maintaining acceptable performance.

Parametric Knowledge Adaptation: Making Models Smarter

Mayur introduced several approaches used to address these challenges, starting with Parametric Knowledge Adaptation techniques that modify the model’s internal parameters:

Domain Adaptive Pre-Training (DAPT)

This involves further pre-training the model on domain-specific data to help it understand the terminology, context, and patterns of your target domain.

Supervised Fine-Tuning (SFT)

Using labeled examples to teach the model how to perform specific tasks or respond appropriately in particular situations.

Parameter-Efficient Fine-Tuning (PEFT) with LoRA

Low-Rank Adaptation (LoRA) is particularly exciting because it allows for efficient domain adaptation without requiring full model retraining. Mayur emphasized that this is “ideal for efficient domain shift,” enabling developers to customize models for specific use cases with minimal computational overhead.

Semi-Parametric Adaptation: External Knowledge Integration

Beyond modifying the model itself, Mayur discussed Semi-Parametric Adaptation approaches that enhance model capabilities by connecting them to external knowledge sources:

Retrieval-Augmented Generation (RAG)

This approach allows models to access and incorporate information from external databases or documents in real-time, effectively solving the static knowledge problem.

Agent-Based Systems

By integrating models into agent frameworks, we can create systems that can actively seek out current information and perform complex tasks beyond simple text generation.

Mayur noted that these approaches are “ideal for real-time knowledge” requirements, ensuring that AI systems always have access to the most current information.

Prompt Engineering Strategies for Maximum Effectiveness

Mayur shared some proven techniques for getting the best performance from adapted models:

Few-Shot Prompting

Providing the model with a few examples of the desired input-output pattern helps it understand exactly what you’re looking for, significantly improving performance on specific tasks.

Chain-of-Thought Prompting

This technique encourages the model to “think out loud,” breaking down complex problems into step-by-step reasoning. This not only improves accuracy but also makes the model’s decision-making process more transparent and debuggable.

Practical Implementation: LoRA and Supervised Fine-Tuning

The “main part” of Mayur’s presentation focused on the practical implementation of these adaptation techniques. He explained how Low-Rank Adaptation (LoRA) works in practice:

LoRA involves freezing the pre-trained model weights and training small, low-rank matrices that modify the model’s behavior. This approach is incredibly efficient because:

- It requires far less training data than full fine-tuning

- The computational overhead is minimal

- Multiple LoRA adapters can be created and switched between dynamically

When combined with Supervised Fine-Tuning, developers can create highly specialized models that excel at specific tasks while maintaining the broad capabilities of the base model.

The Power of Differential Adapters

One of the most exciting concepts Mayur introduced was the idea of using different adapters with Gemma models to serve different use cases. This means a single base model can be rapidly adapted for:

- Customer Service: Optimized for helpful, empathetic responses

- Content Creation: Fine-tuned for creative writing and storytelling

- Technical Documentation: Specialized for clear, accurate technical explanations

- Education: Adapted for teaching and explanation tasks

Real-World Demonstration: Microfables

To make these concepts concrete, Mayur shared a working example he developed called Microfables. The project demonstrates how to effectively combine cloud-based Gemini API capabilities with on-device Gemma models to create engaging, interactive story applications. He provided a GitHub link (github.com/mayurmadnani/Microfables) for attendees to explore the implementation details.

This practical example showed how the theoretical concepts translate into real applications that can refine and adapt stories while maintaining privacy through on-device processing when needed, and leveraging cloud capabilities when available.

Mayur’s session provided a comprehensive roadmap for developers looking to build the next generation of AI applications that seamlessly blend the power of cloud models with the privacy and efficiency of on-device processing.