This lightning tour was planned by Sairam Sundaresan, an Engineering Leader, taking us through the evolution and architecture of Vision Language Models (VLMs), highlighting key breakthroughs and the challenges that lie ahead in this exciting field.

Fun fact! He is the author of the book “AI for the Rest of Us,“

How the blind poet got eyesight

Sairam opened with examples like Google Lens and Google Photos as early manifestations of this capability, but the journey to get here has been long and complex.

The Semantic Gap Challenge

One of the fundamental problems in computer vision has always been the semantic gap the difference between what we can computationally extract from visual data and what a human can understand.

Any guesses what a “frondle” is ?

Try to visualize and predict what a “frondle” would be. The answer, of course, is nothing—because “frondle” isn’t a real thing. BUT, We have our own guesses right ?

This demonstrates how human language and visual understanding are deeply interconnected, and how AI systems need to bridge this gap.

Building the Bridge: One Architecture to Rule Them All

The path to modern VLMs has been marked by several architectural revolutions. Sairam walked us through the evolution:

The CNN Era

For years, Convolutional Neural Networks (CNNs) were the go-to architecture for computer vision tasks. They excelled at detecting patterns and features in images but struggled with understanding context and sequential relationships.

The RNN Limitation

Recurrent Neural Networks (RNNs) emerged as a solution for processing sequences, but they had significant limitations with context understanding. Their sequential nature also made them difficult to parallelize for training on modern hardware.

The LSTM Improvement

Long Short-Term Memory (LSTM) networks addressed some of RNN’s memory limitations but suffered from vanishing gradient problems and remained inherently sequential, limiting their scalability.

The Need for Hybrid Approaches

The community recognized that something like a CNN-LSTM hybrid was needed—combining the spatial understanding of CNNs with the sequential processing capabilities of LSTMs. But the real breakthrough came with a different approach entirely.

The Transformer Revolution

The landmark paper “Attention Is All You Need” changed everything. Transformers brought several key advantages:

- Context Understanding: Superior ability to understand relationships between different parts of the input

- Parallel Processing: Unlike RNNs, transformers can process all tokens simultaneously

- Scalability: Better suited for modern hardware and large-scale training

2020 Breakthrough: Vision Transformers (ViT)

A pivotal moment came in 2020 with the paper “An Image is Worth 16x16 Words” (ViT). This breakthrough showed that transformers could be applied not just to text but to images as well. The approach was elegant: break images into patches (16x16 pixel squares) and treat them like word tokens.

While this approach potentially risks losing spatial context, Sairam explained that positional encoding helps maintain awareness of where each patch came from in the original image. Adding visual information this way creates what’s called grounding—connecting language understanding to visual reality.

How We Build Modern Vision Language Models

Sairam simplified the complex architecture of VLMs into three intuitive components:

- The Eye: Vision encoder that processes images

- The Translator: Cross-modal attention mechanisms

- The Brain: Language model that generates text understanding

The key technical challenge is multimodal fusion—how to effectively combine visual and textual information. Sairam outlined three main strategies:

Early Fusion

Combine visual and text information at the input level, processing them together from the start.

Intermediate Fusion

Process modalities separately initially, then combine them in the middle layers of the network.

Late Fusion

Process visual and text information independently through separate networks, only combining them at the final output stage.

Revolutionary Approaches in VLM Development

Sairam then walked us through several groundbreaking approaches that have shaped modern VLMs:

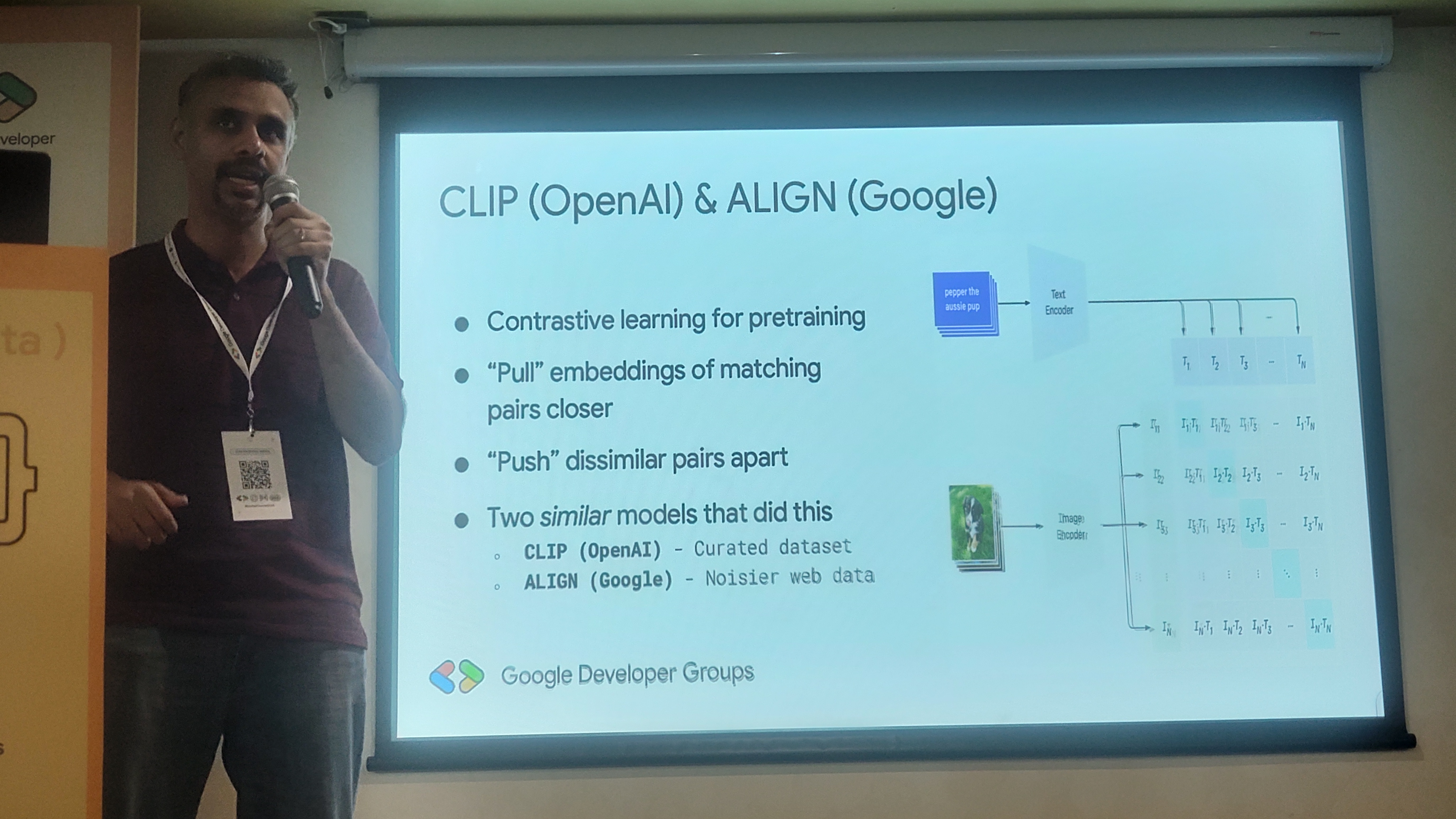

CLIP (OpenAI) & ALIGN (Google)

These models introduced contrastive learning for pretraining. The concept is brilliant: pull the embeddings of matching image-text pairs closer together while pushing dissimilar pairs apart. This creates a shared space where related images and text naturally cluster together.

SimVLM

Google’s SimVLM embraced “simplicity at scale” with a PrefixLM training objective. This approach showed that sometimes the most straightforward methods can be surprisingly effective when scaled properly.

Flamingo (Google)

Flamingo introduced a clever combination of few-shot learning with selective training. The key innovation was freezing the pretrained vision and language models, then adding a trainable projector that learns to bridge between them. Only this bridging component is trained, making the process highly efficient.

Cross-Attention Mechanism

This allows the model to pay attention to different parts of the visual and textual inputs when generating each part of the output, much like how humans look at specific details when describing a scene.

PaLI (Google)

PaLI represents a “united vision-language culture,” bringing together the best of both worlds in a single, cohesive architecture.

Gemini: The Unified Mind

Sairam built up to what might be the culmination of this journey: Google’s Gemini model. Unlike previous models that combined separate vision and language components, Gemini is natively multimodal—designed from the ground up to understand and process multiple types of information seamlessly.

This unified approach represents a significant step forward, creating models that can naturally flow between seeing, reading, reasoning, and generating content across modalities.

Challenges on the Road Ahead

Despite the remarkable progress, Sairam emphasized that we’re still facing significant challenges:

From VLM to VLA (Vision-Language-Action)

The next frontier is moving from understanding to action—Vision-Language-Action models that can not only comprehend visual information but take meaningful actions based on that understanding.

The Hallucination Problem

Like all large AI models, VLMs can suffer from hallucinations—generating descriptions or details that aren’t actually present in the images they’re analyzing. This remains a critical challenge for real-world applications.

Typographic Attacks

One particularly concerning vulnerability Sairam highlighted was typographic attacks, where specially designed text in images can confuse models and cause them to misinterpret what they’re seeing.

Sairam’s lightning tour provided both historical context and forward-looking perspective, helping the audience appreciate not just how far we’ve come in teaching AI to see, but also the exciting challenges that lie ahead in creating truly perceptive and reliable vision-language systems.

This was definitely a session to remember!