One of the most critical aspects of artificial intelligence is memory. This session delved into how memory systems work in agentic AI, drawing parallels with human cognitive processes and demonstrating practical implementations using Google’s Vertex AI platform.

Understanding AI Agents: The Foundation

Vipin began by establishing what we mean by AI agents in today’s context. An AI agent consists of three core components:

- LLM (Large Language Model): The reasoning engine that processes information and generates responses

- Tools: External capabilities that allow the agent to interact with systems and take actions

- Memory: The ability to store, retrieve, and learn from past interactions and information
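To make the three components concrete, here is a minimal sketch of how they might fit together. It is not how any particular framework structures an agent: the `Agent` class, the `CALL <tool> <arg>` convention, and the `fake_llm` stub are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    """Toy agent: an LLM callable, named tools, and a list used as memory."""
    llm: Callable[[str], str]                      # stand-in for a real model call
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    memory: List[str] = field(default_factory=list)

    def run(self, user_input: str) -> str:
        # Memory: past turns are prepended so the LLM sees them as context.
        prompt = "\n".join(self.memory + [f"user: {user_input}"])
        reply = self.llm(prompt)
        # Tools: a reply shaped like "CALL <tool> <arg>" triggers that tool.
        if reply.startswith("CALL "):
            _, name, arg = reply.split(" ", 2)
            reply = self.tools[name](arg)
        # Store both sides of the turn for the next call.
        self.memory += [f"user: {user_input}", f"agent: {reply}"]
        return reply


def fake_llm(prompt: str) -> str:
    """Hypothetical stub: always asks for the 'upper' tool on the latest message."""
    last_line = prompt.splitlines()[-1]
    return "CALL upper " + last_line[len("user: "):]
```

Calling `Agent(llm=fake_llm, tools={"upper": str.upper}).run("hello")` routes the request through the stub, the tool, and the memory list in turn.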

Why Memory Matters

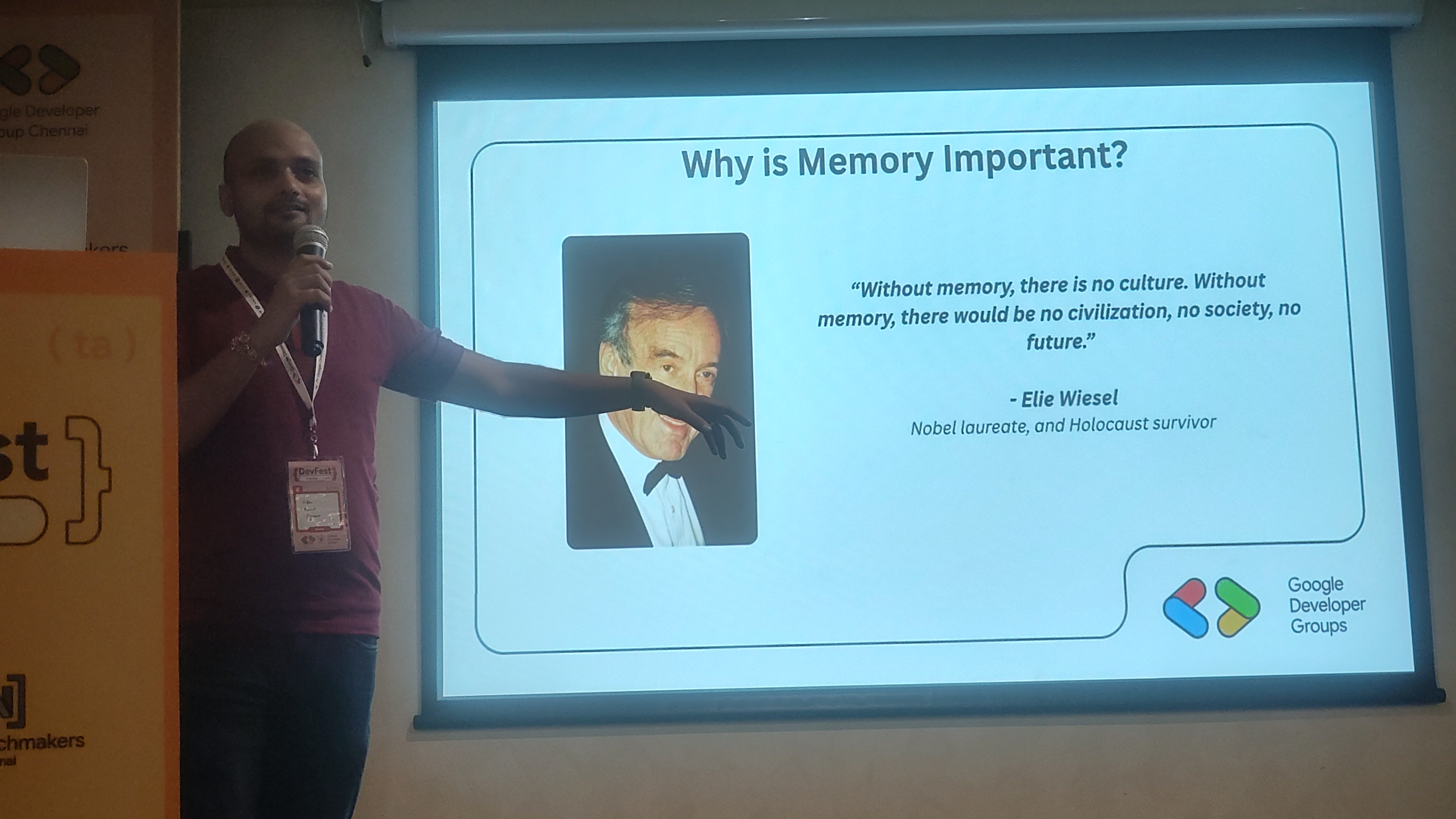

To set the stage, Vipin referenced Nobel laureate Elie Wiesel’s profound insights on memory:

“Without memory, there is no culture. Without memory, there would be no civilization, no society, no future.”

This philosophical framing highlighted why memory isn’t just a technical consideration: it’s fundamental to creating intelligent systems that can learn, adapt, and maintain context over time.

Human Memory: Nature’s Template

Before diving into technical implementations, Vipin provided a fascinating overview of how human memory works, serving as inspiration for AI memory systems:

Basic Memory Types

- Short-term Memory: Temporary storage for immediate tasks

- Long-term Memory: Persistent storage for knowledge and experiences

Detailed Memory Architecture

Working Memory Systems

- Sensory Memory: Brief retention of sensory information

- Working Memory: Active processing and manipulation of information

Explicit (Conscious) Memory

- Episodic Memory: Personal experiences and specific events

- Semantic Memory: General knowledge and facts about the world

Implicit (Unconscious) Memory

- Procedural Memory: Skills and habits that operate automatically

This comprehensive understanding of human memory provides a blueprint for designing more sophisticated AI memory systems.

Memory in Large Language Models: Current State

Vipin then turned his attention to how memory currently works in LLM-based systems:

Short-term Memory: The Context Window

Current LLMs primarily rely on their context window for short-term memory. This allows them to maintain awareness of recent conversations and immediate context, but the window holds only a fixed number of tokens, and nothing in it persists once the conversation ends.
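A rough sketch of what relying on the context window means in practice: when history outgrows the budget, the oldest turns are dropped. The word-count "tokenizer" and the function names here are simplifying assumptions, not a real tokenizer or any library's API.

```python
from typing import List


def count_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def fit_to_window(messages: List[str], max_tokens: int) -> List[str]:
    """Keep the most recent messages whose combined size fits the budget."""
    kept: List[str] = []
    used = 0
    for message in reversed(messages):       # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break                             # everything older is dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))               # restore chronological order
```

With a budget of 7 "tokens", the history `["my name is Ada", "I like climbing", "what is my name"]` loses its first message, so the model can no longer answer the final question from context alone.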

Long-term Memory: Two Approaches

Core LLM Memory

- Procedural Memory: Built into the model through training, enabling language understanding and reasoning

- Reinforcement Learning: Ongoing learning from feedback and interactions

External Memory Systems

This is where modern AI systems are breaking new ground:

Semantic Memory Storage:

- Vector Databases: Store information as high-dimensional vectors for efficient similarity search

- Graph Databases: Maintain relationships between different pieces of information
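As an illustration of the graph idea, facts can be held as subject, relation, object edges and looked up by following a relation. This is a toy in-memory sketch with hypothetical names; a real graph database would add indexing, persistence, and a query language.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple


class GraphMemory:
    """Toy semantic memory: facts stored as (subject, relation, object) edges."""

    def __init__(self) -> None:
        self._edges: DefaultDict[str, List[Tuple[str, str]]] = defaultdict(list)

    def add_fact(self, subject: str, relation: str, obj: str) -> None:
        if (relation, obj) not in self._edges[subject]:   # skip exact duplicates
            self._edges[subject].append((relation, obj))

    def facts_about(self, subject: str) -> List[Tuple[str, str]]:
        """Return every (relation, object) pair recorded for a subject."""
        return list(self._edges.get(subject, []))

    def related(self, subject: str, relation: str) -> List[str]:
        """Follow one relation type outward from a subject."""
        return [o for r, o in self._edges.get(subject, []) if r == relation]
```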

Episodic Memory Storage:

- Vector Databases with RAG: Store conversation histories and specific events

- Retrieval-Augmented Generation: Allow agents to recall specific past interactions
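The retrieval loop behind these bullets can be sketched in a few lines. Everything here is an assumption made for illustration: `embed` uses word counts where a real system would call an embedding model, and `EpisodicStore` is a plain list rather than an actual vector database.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

Vector = Dict[str, float]


def embed(text: str) -> Vector:
    """Hypothetical embedding: word counts. A real system would call a model."""
    return {word: float(n) for word, n in Counter(text.lower().split()).items()}


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(value * b.get(word, 0.0) for word, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class EpisodicStore:
    """Toy vector store holding snippets of past conversations."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, Vector]] = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> List[str]:
        """Return the k stored snippets most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(store: EpisodicStore, question: str) -> str:
    """Retrieval-augmented prompt: recalled snippets first, then the question."""
    recalled = "\n".join(f"- {memory}" for memory in store.search(question))
    return f"Relevant memories:\n{recalled}\n\nQuestion: {question}"
```

The design point is that only the few most similar memories are placed in the prompt, so the store can grow far beyond what a context window could hold.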

The Technical Challenges: Why Memory is Hard

Despite these advances, Vipin emphasized that implementing effective memory in AI agents faces several significant challenges:

LLM Context Window Limitations

The fixed size of context windows means agents can only maintain awareness of a limited amount of information at any given time.

Attention Mechanism Degradation

Even within the context window, the effectiveness of attention mechanisms can degrade as the amount of information increases, leading to poorer performance on earlier parts of long conversations.

Conflicting and Distracting Information

Agents struggle to prioritize relevant information when faced with conflicting details or when important context is buried among less relevant data.

Chaotic Dynamic Environments

Real-world environments are constantly changing, making it challenging to maintain accurate and current memory representations.

The “Lost in the Middle” Problem

Perhaps most critically, Vipin highlighted the “Lost in the Middle” phenomenon: LLMs tend to pay more attention to information at the beginning and end of their context window and struggle to use information placed in the middle.

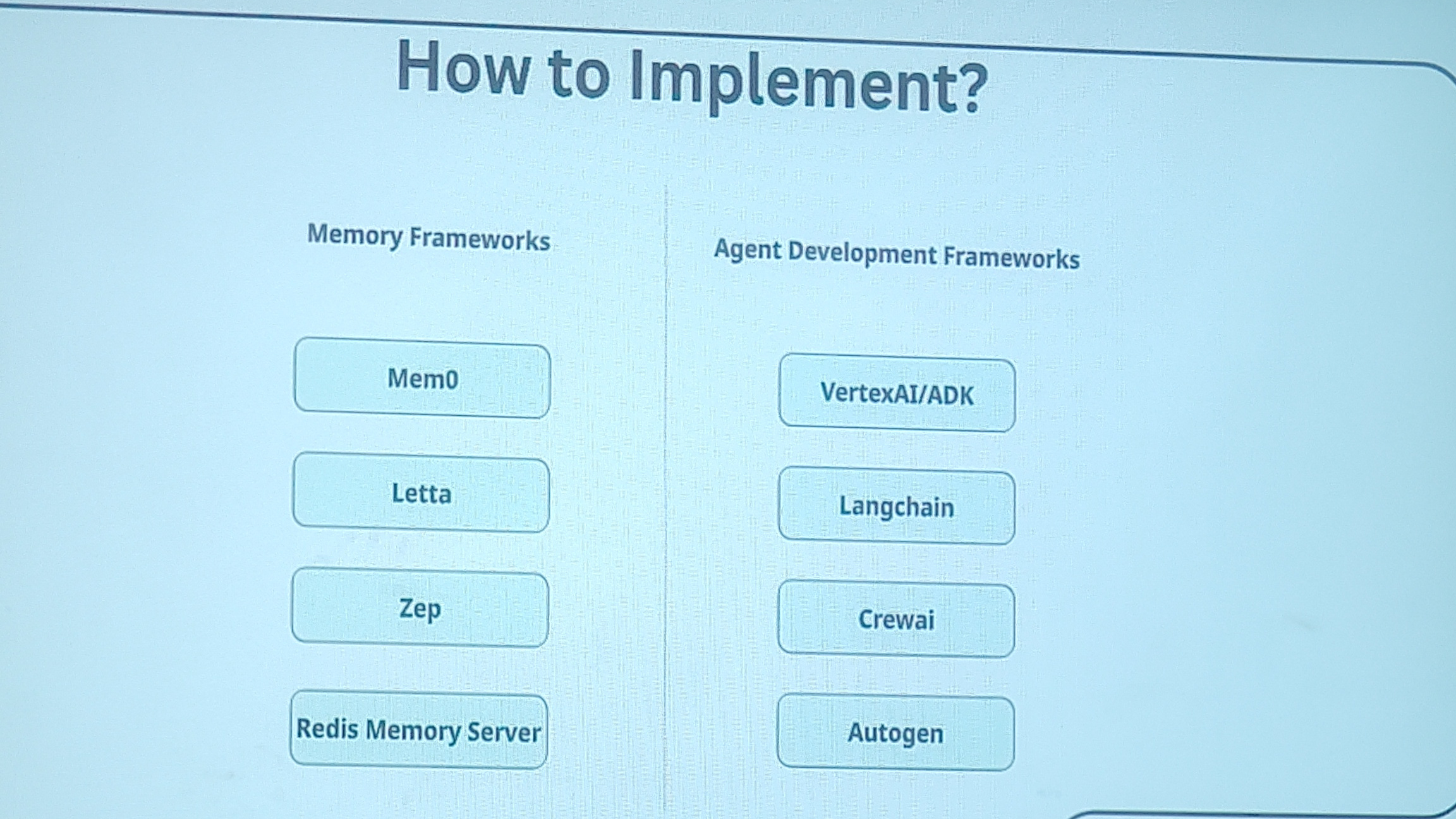

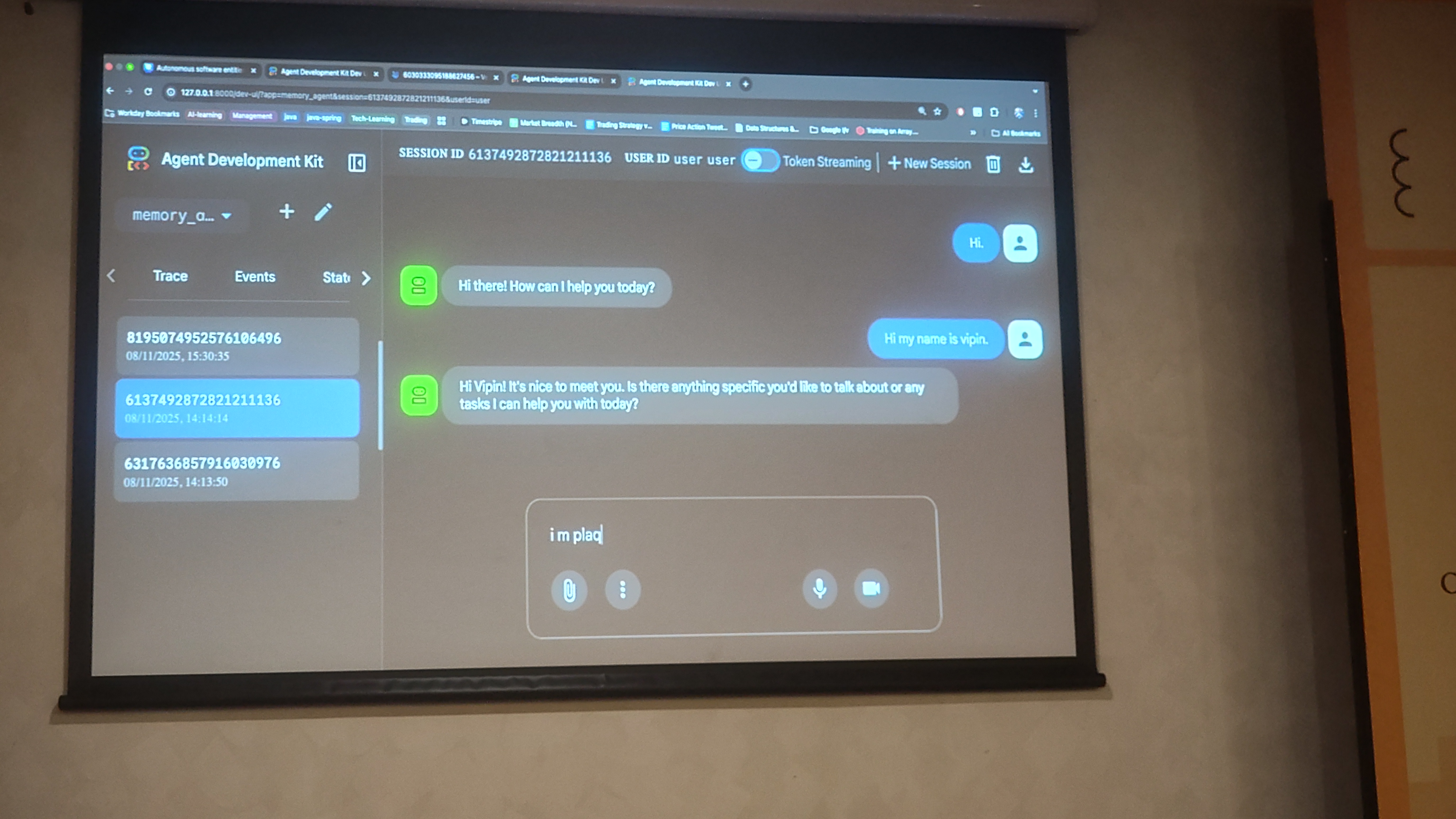
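One mitigation sometimes applied in retrieval pipelines is to reorder retrieved passages so the strongest ones sit at the edges of the prompt and the weakest in the middle. The recap does not say whether the session covered this, so treat the sketch below as an illustration of the problem rather than Vipin's method; `edges_first` is a hypothetical name.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def edges_first(ranked: Sequence[T]) -> List[T]:
    """Reorder items ranked best-first so the best land at the start and end.

    Items at even indices (ranks 1, 3, 5, ...) fill the front in order.
    Items at odd indices (ranks 2, 4, 6, ...) fill the back in reverse,
    which pushes the weakest items toward the middle.
    """
    front = list(ranked[0::2])
    back = list(ranked[1::2])
    return front + back[::-1]
```

For five passages ranked 1 (best) to 5 (worst), the result is `[1, 3, 5, 4, 2]`: the two best passages occupy the first and last positions, and the worst sits in the middle.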

Google’s Vertex AI ADK: Practical Memory Solutions

Vipin then demonstrated how Google’s Agent Development Kit (ADK) addresses these memory challenges with practical tools and frameworks:

Vertex AI Memory Bank

Google has implemented a Memory Bank system within Vertex AI that provides developers with ready-to-use memory management capabilities. This system handles the complex task of storing, organizing, and retrieving information across agent interactions.
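To give a feel for what storing and retrieving across agent interactions involves, here is a toy in-memory stand-in. It is not the Vertex AI Memory Bank API: the class, its method names, and the keyword matching are all assumptions, and the managed service does considerably more than this.

```python
from collections import defaultdict
from typing import DefaultDict, List


class ToyMemoryBank:
    """Hypothetical stand-in for a managed memory service, keyed by user ID."""

    def __init__(self) -> None:
        self._by_user: DefaultDict[str, List[str]] = defaultdict(list)

    def store_session(self, user_id: str, session_events: List[str]) -> None:
        """Persist what was said in a finished session under the user's ID."""
        self._by_user[user_id].extend(session_events)

    def retrieve(self, user_id: str, query: str) -> List[str]:
        """Return this user's memories that share at least one word with the query."""
        query_words = set(query.lower().split())
        return [
            memory for memory in self._by_user.get(user_id, [])
            if query_words & set(memory.lower().split())
        ]
```

Because memories are keyed by user rather than by session, something said in one conversation can be recalled in a later one, while other users' memories stay out of reach.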

Advanced Memory Management Strategies

Prospective Reflection: Topic-Based Memory

Vipin introduced sophisticated memory management approaches that look forward rather than just backward:

Topic-Based Memory Organization:

- Automatically categorize and organize memories by topic

- Memory Merging: Combine related memories to create more comprehensive understanding

- Memory Addition: Incrementally build knowledge without redundancy
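The merging and addition steps above can be sketched as follows. This is a minimal illustration with hypothetical names; it assumes the topic label is supplied by the caller, whereas an automatic system would need a classifier or an LLM to assign it, and it treats "redundant" as a case-insensitive exact match.

```python
from typing import Dict, List


class TopicMemory:
    """Toy topic-keyed memory with addition and merging."""

    def __init__(self) -> None:
        self.topics: Dict[str, List[str]] = {}

    def add(self, topic: str, fact: str) -> bool:
        """Memory addition: store a fact unless the topic already holds it."""
        facts = self.topics.setdefault(topic, [])
        normalized = fact.strip().lower()
        if any(normalized == existing.lower() for existing in facts):
            return False                      # redundant, nothing stored
        facts.append(fact.strip())
        return True

    def merge(self, source: str, target: str) -> None:
        """Memory merging: fold one topic into another, skipping duplicates."""
        for fact in self.topics.pop(source, []):
            self.add(target, fact)
```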

Retrospective Reflection: Retrieval Refinement

For improving the quality of memory retrieval, Vipin shared several techniques:

Preloaded Memory Systems:

- Agents can be pre-loaded with relevant domain knowledge

- This ensures consistent baseline knowledge across sessions
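In its simplest form, preloading amounts to placing the same baseline facts ahead of every request. The wrapper below is a sketch under that assumption; `with_preloaded_memory` is a hypothetical helper, not an ADK function.

```python
from typing import Callable, List


def with_preloaded_memory(
    llm: Callable[[str], str], baseline_facts: List[str]
) -> Callable[[str], str]:
    """Wrap an LLM callable so every call starts with the same baseline facts."""
    preamble = "Known facts:\n" + "\n".join(f"- {fact}" for fact in baseline_facts)

    def call(user_input: str) -> str:
        # The preamble is identical on every call, giving a consistent baseline.
        return llm(f"{preamble}\n\nUser: {user_input}")

    return call
```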

After-Agent Callback Mechanisms:

- Continuous refinement of memory based on interaction outcomes

- Learning from which memories were useful vs. which led to poor decisions

- Automatic updating of memory relevance and importance
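The feedback loop described in these bullets might look roughly like this. The sketch assumes the caller knows which memories were used and whether the run went well; the class, the `after_agent` method, and the fixed step size are hypothetical and do not reflect the ADK callback signature.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class ScoredMemory:
    text: str
    relevance: float = 1.0


class FeedbackMemory:
    """Toy store whose relevance scores are adjusted after each agent run."""

    def __init__(self, step: float = 0.25) -> None:
        self.items: Dict[str, ScoredMemory] = {}
        self.step = step

    def add(self, key: str, text: str) -> None:
        self.items[key] = ScoredMemory(text)

    def after_agent(self, used_keys: Iterable[str], success: bool) -> None:
        """Hypothetical callback: raise scores after good runs, lower after bad."""
        delta = self.step if success else -self.step
        for key in used_keys:
            if key in self.items:             # unknown keys are ignored
                memory = self.items[key]
                memory.relevance = max(0.0, memory.relevance + delta)

    def top(self, k: int = 1) -> List[str]:
        """Return the k memories with the highest relevance."""
        ranked = sorted(self.items.values(), key=lambda m: m.relevance, reverse=True)
        return [m.text for m in ranked[:k]]
```

Over many runs, memories that keep contributing to poor outcomes sink in the ranking and stop being retrieved first.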

The Path Forward

The key takeaway was that effective memory systems are not just about storing more information; they’re about storing the right information, organizing it intelligently, and retrieving it efficiently when needed. As AI agents become more capable and take on more complex tasks, sophisticated memory management will be crucial for creating systems that can truly understand context, learn from experience, and maintain coherent long-term interactions with users.

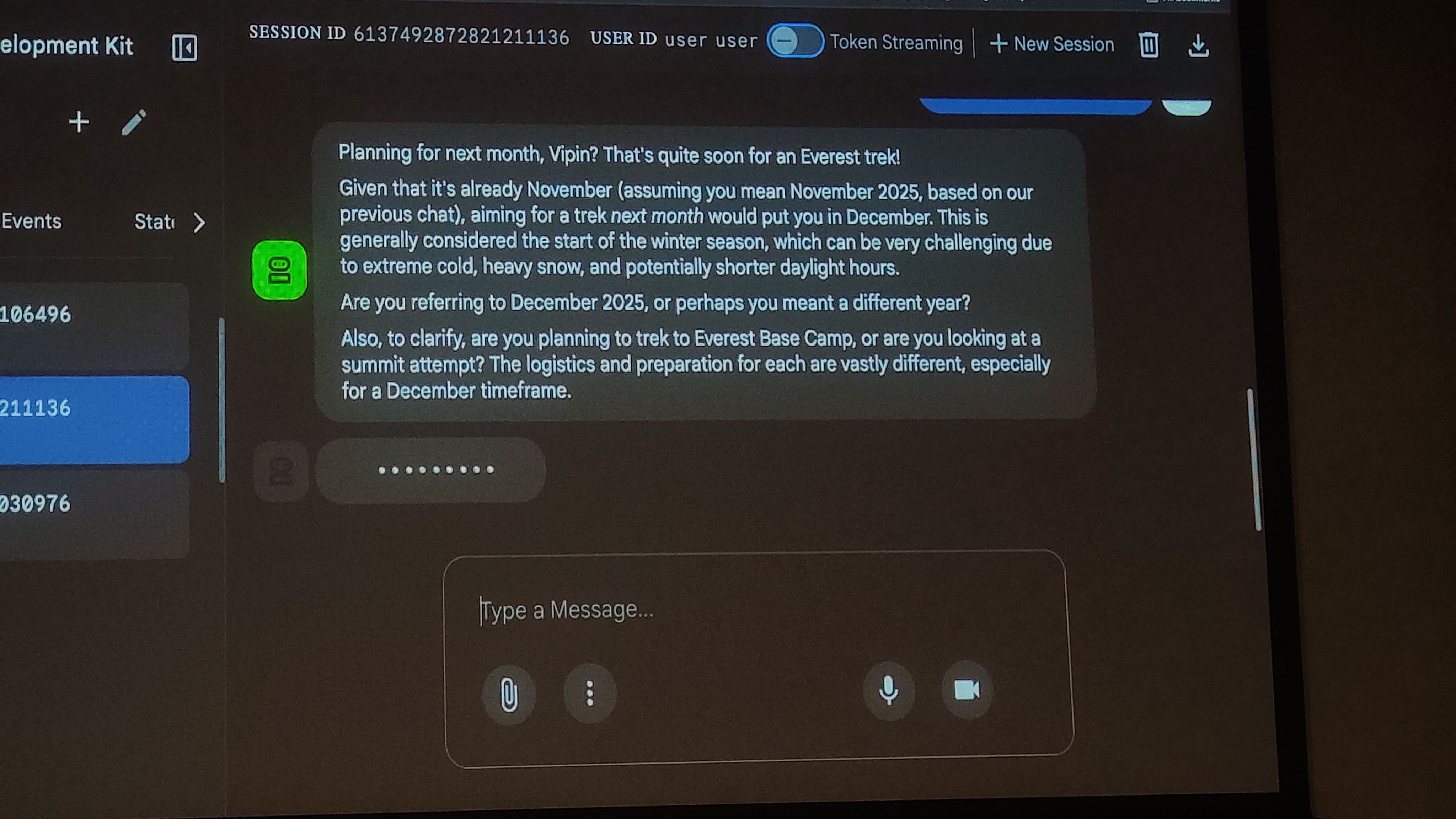

The fun demo, in which his agent would not let him go to Mt. Everest, was the cherry on top of an insightful session!